
composer create-project laravel/laravel ci-showcase

.gitignore file:

.idea

vendor

.env

.env file:

APP_ENV=development

php artisan key:generate, and then I wanted to verify that the primary setup works as expected: ./vendor/bin/phpunit, which produced the output OK (2 tests, 2 assertions). Nice, time to commit this: git commit && git push

At this point, we don't yet have any CI, so let's do something about it!

php -l my-file.php. This is what we're going to use. Because the php -l command doesn't support multiple files as arguments, I've written a small wrapper shell script and saved it to ci/linter.sh:

#!/bin/sh

files=`sh ci/get-changed-php-files.sh | xargs`
last_status=0
status=0

# Loop through changed PHP files and run php -l on each
# ($files is deliberately unquoted so that the shell splits it into one word per file)
for f in $files ; do
    message=`php -l $f`
    last_status="$?"
    if [ "$last_status" -ne "0" ]; then
        # Anything fails -> the whole thing fails
        echo "PHP Linter is not happy about $f: $message"
        status="$last_status"
    fi
done

if [ "$status" -ne "0" ]; then
    echo "PHP syntax validation failed!"
fi

exit $status
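The linter loop boils down to this: run a checker on every file, remember whether any run failed, and report a failing status at the end. Here is a minimal sketch of that pattern as a reusable function. The name lint_all and the LINT_CMD override are my own, hypothetical additions (they are not part of the original script); LINT_CMD exists only so that the loop can be tried out on a machine without PHP.

```shell
#!/bin/sh
# Hypothetical helper, not part of the original ci/linter.sh.
# LINT_CMD is an assumed override hook; by default the real linter is used.
LINT_CMD="${LINT_CMD:-php -l}"

# Run $LINT_CMD on every file passed as an argument.
# Returns 0 if all files pass, 1 if at least one of them fails.
lint_all() {
    lint_status=0
    for f in "$@"; do
        # Capture the checker's output; the "if" tests its exit code
        if ! message=$($LINT_CMD "$f" 2>&1); then
            echo "PHP Linter is not happy about $f: $message"
            lint_status=1
        fi
    done
    return "$lint_status"
}
```

It could be called as lint_all app/User.php routes/web.php || echo "PHP syntax validation failed!". Passing the files as separate arguments means the function does not depend on word splitting of one long string.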

ci/get-changed-php-files.sh:

#!/bin/sh

# What's happening here?
#
# 1. We get names and statuses of files that differ in current branch from their state in origin/master.
#    These come in form (multiline)
# 2. The output from git diff is filtered by unix grep utility, we only need files with names ending in .php
# 3. One more filter: filter *out* (grep -v) all lines starting with R or D.
#    D means "deleted", R means "renamed"
# 4. The filtered status-name list is passed on to awk command, which is instructed to take only the 2nd part
#    of every line, thus just the filename
git diff --name-status origin/master | grep '\.php$' | grep -v "^[RD]" | awk '{ print $2 }'
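To see what each filter in this pipeline does, here is a small sketch that runs the same grep and awk stages on hard-coded sample input instead of a real git diff. The function names filter_changed_php and sample_diff, as well as the sample file names, are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: the same filters as in ci/get-changed-php-files.sh,
# but reading the "status<TAB>name" list from stdin instead of from git.
filter_changed_php() {
    grep '\.php$' | grep -v '^[RD]' | awk '{ print $2 }'
}

# Made-up sample in the format printed by `git diff --name-status`
sample_diff() {
    printf 'M\tapp/User.php\n'
    printf 'D\tapp/Old.php\n'
    printf 'A\tREADME.md\n'
    printf 'R100\tapp/A.php\tapp/B.php\n'
    printf 'A\ttests/NewTest.php\n'
}

sample_diff | filter_changed_php
# prints:
# app/User.php
# tests/NewTest.php
```

Only the modified and the added PHP files survive; the deleted file, the renamed file and the non-PHP file are dropped.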

.gitlab-ci.yml. This is where your pipeline is declared using YAML notation:

# we're using this beautiful tool for our pipeline: https://github.com/jakzal/phpqa

image: jakzal/phpqa:alpine

# For this sample pipeline, we'll only have 1 stage; in the real world you would want to add at least "deploy" as well
stages:
  - QA

linter:
  stage: QA
  # this is the main part: what is actually executed
  script:
    - sh ci/get-changed-php-files.sh | xargs sh ci/linter.sh

image: jakzal/phpqa:alpine and it's telling GitLab that we want to run our pipeline using a PHP-QA utility by jakzal. It is a Docker image containing PHP and a huge variety of QA tools. We declare one stage, QA, and for now this stage has just a single job named linter. Every job can have its own Docker image, but we don't need that for the purpose of this tutorial.

Our project reaches Step 2. Once I had pushed these changes, I immediately went to the project's CI/CD page. Aaaand... the pipeline was already running! I clicked on the linter job and saw the following happy green output:

Running with gitlab-runner 11.9.0-rc2 (227934c0) on docker-auto-scale ed2dce3a

Using Docker executor with image jakzal/phpqa:alpine ...

Pulling docker image jakzal/phpqa:alpine ...

Using docker image sha256:12bab06185e59387a4bf9f6054e0de9e0d5394ef6400718332c272be8956218f for jakzal/phpqa:alpine ...

Running on runner-ed2dce3a-project-11318734-concurrent-0 via runner-ed2dce3a-srm-1552606379-07370f92...

Initialized empty Git repository in /builds/crocodile2u/ci-showcase/.git/

Fetching changes...

Created fresh repository.

From https://gitlab.com/crocodile2u/ci-showcase
 * [new branch] master -> origin/master
 * [new branch] step-1 -> origin/step-1
 * [new branch] step-2 -> origin/step-2

Checking out 1651a4e3 as step-2...

Skipping Git submodules setup

$ sh ci/get-changed-php-files.sh | xargs sh ci/linter.sh

Job succeeded

phpcs.xml:

<?xml version="1.0"?>

/resources

.gitlab-ci.yml:

code-style:
  stage: QA
  script:
    # Variable $files will contain the list of PHP files that have changes
    - files=`sh ci/get-changed-php-files.sh`
    # If this list is not empty, we execute the phpcs command on all of them
    - if [ ! -z "$files" ]; then echo $files | xargs phpcs; fi
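The emptiness check in this job is there for a reason: with GNU xargs, the command is still run once when the input is empty unless -r (--no-run-if-empty) is passed, so without the check phpcs could be started with no file arguments at all. Below is a minimal sketch of the same guard as a shell function. The name run_on_files is my own invention, and echo merely stands in for phpcs in the usage line so that the snippet can be tried anywhere.

```shell
#!/bin/sh
# Hypothetical helper: run a tool on a space-separated file list,
# but only when the list is not empty.
run_on_files() {
    tool="$1"
    files="$2"
    if [ -n "$files" ]; then
        # $files and $tool are unquoted on purpose so they split into words
        echo $files | xargs $tool
    else
        echo "No changed PHP files, skipping $tool"
    fi
}

run_on_files echo "app/User.php app/Http/Kernel.php"
# prints: app/User.php app/Http/Kernel.php
```

In the CI job this would be called as run_on_files phpcs "$files". Like the original one-liner, it assumes that file names contain no spaces.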

phpcs utility. That's it, Step 3 is finished! If you go to see the pipeline now, you will notice that the linter and code-style jobs run in parallel.

vendor folder with composer. You might have noticed that our .gitignore file has the vendor folder as one of its entries, which means that it is not managed by the version control system. Some prefer their dependencies to be part of their Git repository; I prefer to have only the composer.json declarations in Git. It makes the repo much, much smaller than the other way round, and also makes it easy to avoid bloating your production builds with libraries only needed for development.

.gitlab-ci.yml:

test:
  stage: QA
  cache:
    key: dependencies-including-dev
    paths:
      - vendor/
  script:
    - composer install
    - ./vendor/bin/phpunit

composer create-project to create our project boilerplate.

laravel/laravel package has a lot of things included in it, and phpunit.xml is also one of them. All I had to do was to add another line to it:

php artisan key:generate.

git commit && git push, and we have all three jobs on the QA stage!

$ ci/linter.sh

PHP Linter is not happy about app/User.php:

Parse error: syntax error, unexpected 'syntax' (T_STRING), expecting function (T_FUNCTION) or const (T_CONST) in app/User.php on line 11

Errors parsing app/User.php

PHP syntax validation failed!

ERROR: Job failed: exit code 255

$ if [ ! -z "$files" ]; then echo $files | xargs phpcs; fi

FILE: ...ilds/crocodile2u/ci-showcase/app/Http/Controllers/Controller.php

----------------------------------------------------------------------

FOUND 0 ERRORS AND 1 WARNING AFFECTING 1 LINE

----------------------------------------------------------------------

 13 | WARNING | Line exceeds 120 characters; contains 129 characters

----------------------------------------------------------------------

Time: 39ms; Memory: 6MB

ERROR: Job failed: exit code 123

$ ./vendor/bin/phpunit

PHPUnit 7.5.6 by Sebastian Bergmann and contributors.

F. 2 / 2 (100%)

Time: 102 ms, Memory: 14.00 MB

There was 1 failure:

1) Tests\Unit\ExampleTest::testBasicTest

This test is now failing

Failed asserting that false is true.

/builds/crocodile2u/ci-showcase/tests/Unit/ExampleTest.php:17

FAILURES!

Tests: 2, Assertions: 2, Failures: 1.

ERROR: Job failed: exit code 1

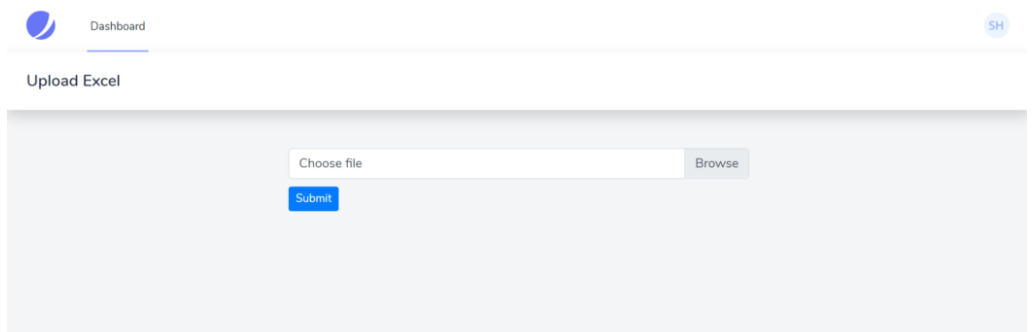

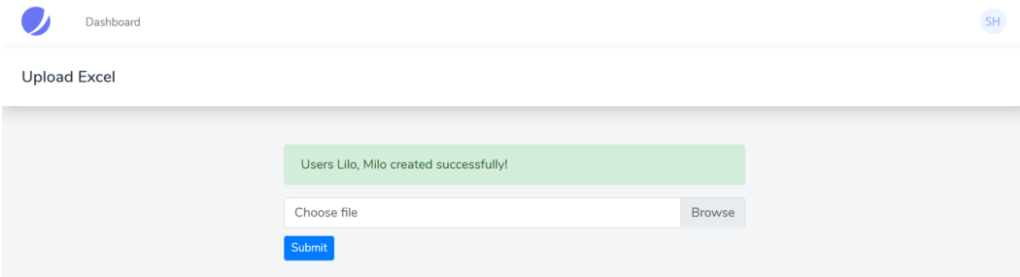

<form method="post" enctype="multipart/form-data">
    @csrf
    <div class="custom-file">
        <input type="file" accept=".csv" name="excel" class="custom-file-input" id="customFile" />
        <label class="custom-file-label" for="customFile">Choose file</label>
    </div>
    <div>
        <button type="submit" class="btn btn-primary btn-sm" style="margin-top: 10px">Submit</button>
    </div>
</form>

php artisan make:controller UploadController

Route::post('/upload', [UploadController::class, 'upload'])->name('upload')->middleware('auth');

<form method="post" action="{{route('upload')}}" enctype="multipart/form-data">

$file = $request->file('excel');

if (($handle = fopen($file, "r")) !== FALSE) {
    while (($data = fgetcsv($handle, 1000, ",")) !== FALSE) {
        .....
    }
}

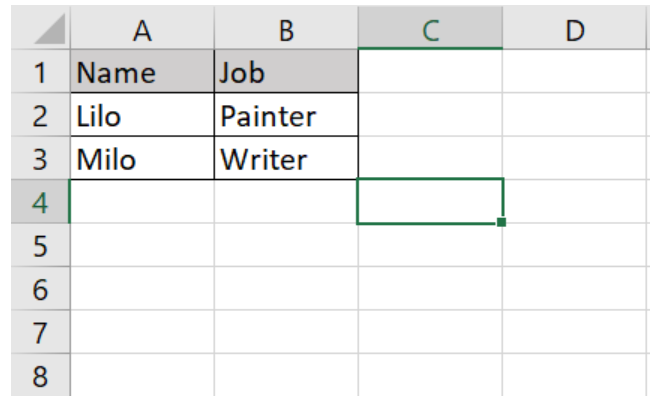

{
    "name": "test",
    "job": "test"
}

if (($handle = fopen($file, "r")) !== FALSE) {
    while (($data = fgetcsv($handle, 1000, ",")) !== FALSE) {
        Http::post('https://reqres.in/api/users', [
            'name' => $data[0],
            'job' => $data[1],
        ]);
    }
}

public function upload(Request $request){
    $file = $request->file('excel');
    if($file){
        $row = 1;
        $array = [];
        if (($handle = fopen($file, "r")) !== FALSE) {
            while (($data = fgetcsv($handle, 1000, ",")) !== FALSE) {
                // Skip the first row (presumably the CSV header)
                if($row > 1){
                    Http::post('https://reqres.in/api/users', [
                        'name' => $data[0],
                        'job' => $data[1],
                    ]);
                    array_push($array,$data[0]);
                }
                // implode() takes the separator first; PHP 8 no longer accepts the reversed order
                $request->session()->flash('status', 'Users '.implode(", ", $array).' created successfully!');
                $row++;
            }
            fclose($handle);
        }
    }else{
        $request->session()->flash('error', 'Please choose a file to submit.');
    }
    return view('dashboard');
}

<div class="container max-w-7xl mx-auto sm:px-6 lg:px-8" style="width: 50%">
    @if (session('status'))
        <div class="alert alert-success">
            {{ session('status') }}
        </div>
    @endif
    @if (session('error'))
        <div class="alert alert-error">
            {{ session('error') }}
        </div>
    @endif
    <form action="{{route('upload')}}" method="post" enctype="multipart/form-data">
        @csrf
        <div class="custom-file">
            <input type="file" accept=".csv" name="excel" class="custom-file-input" id="customFile" />
            <label class="custom-file-label" for="customFile">Choose file</label>
        </div>
        <div>
            <button type="submit" class="btn btn-primary btn-sm" style="margin-top: 10px">Submit</button>
        </div>
    </form>
</div>
