What is a Daemon?

The term daemon was coined by the programmers of Project MAC at MIT. It is inspired by Maxwell's demon, an imaginary being in charge of sorting molecules in the background. UNIX systems adopted this terminology for background programs.

The word also refers to a being from Greek mythology that performs the tasks the gods do not want to take on. As stated in the "UNIX System Administration Handbook", in ancient Greece the concept of a "personal daemon" was, in part, comparable to the modern concept of a "guardian angel." The BSD family of operating systems uses an image of a daemon as its logo.

Daemons are usually started at machine boot time. In the technical sense, a daemon is a process that has no controlling terminal and therefore no user interface. Most often, the parent process of a daemon is init, the root process on UNIX, although many daemons are launched by special rc.d scripts started from a terminal console.

W. Richard Stevens describes the following steps for writing daemons:

1. Reset the file mode creation mask to 0 with umask(), so that permission bits masked by the launching process do not restrict the files the daemon creates.

2. Call fork() and exit the parent process. If the daemon was launched as a shell command, the shell then considers the command finished. The child inherits the process group ID of the parent but gets its own process ID, which ensures that it is not a process group leader.

3. Create a new session by calling setsid(). The process becomes the leader of the new session and of a new process group, and loses its controlling terminal.

4. Change the current working directory to the root directory, so that the daemon does not keep a mounted file system busy.

5. Close all file descriptors.

6. Redirect descriptors 0, 1, and 2 (STDIN, STDOUT, and STDERR) to /dev/null or to files such as /var/log/project_name.out, because some standard library functions use these descriptors.

7. Record the PID (process ID) in a pid file: /var/run/projectname.pid.

8. Handle the SIGTERM and SIGHUP signals correctly: on termination, stop all child processes and remove the pid files; on hangup, reload the configuration.

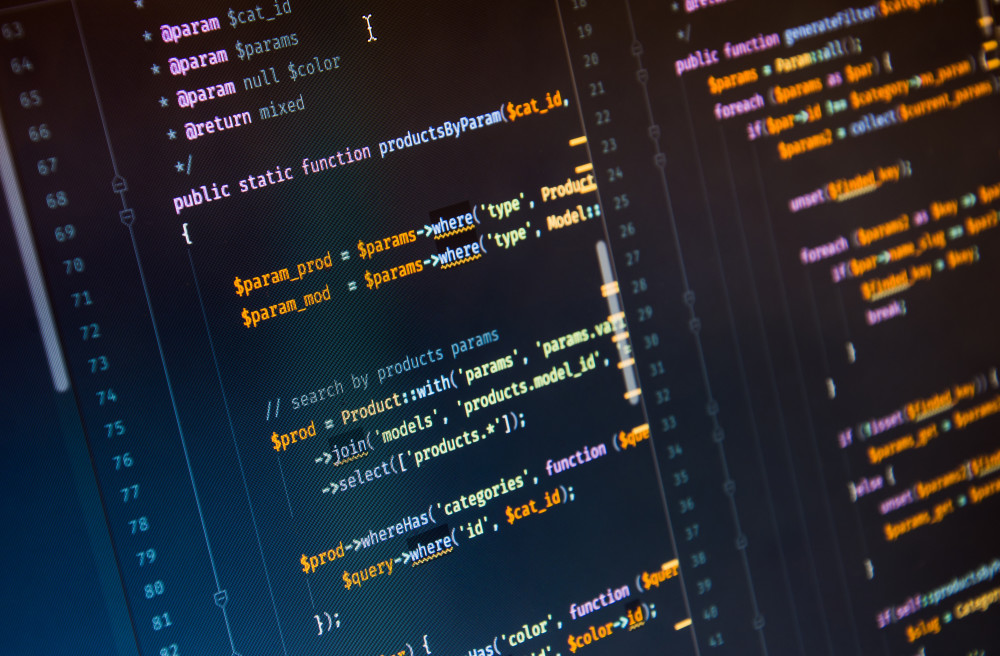

How to Create Daemons in PHP

To create daemons in PHP, you need the pcntl and posix extensions. For fast communication within daemon scripts, the libevent extension is recommended for asynchronous I/O.

Let's take a closer look at the code that starts a daemon:

umask(0);
$pid = pcntl_fork();
if ($pid < 0) {
    // fork failed: report and exit with a non-zero status
    print('fork failed');
    exit(1);
}

After the fork, the program continues in two branches, one in the parent process and one in the child process. The two are distinguished by the value returned by the fork call: the parent receives the process ID of the newly created child, and the child receives 0.
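
The branching on the return value can be tried in isolation. The following is a minimal sketch, assuming the pcntl extension is available on the command line; run_in_child() is a hypothetical helper name, not part of any library:

```php
<?php
// Sketch: tell parent and child apart by the return value of pcntl_fork().
// run_in_child() is a hypothetical helper used only for illustration.

/**
 * Fork, run $task in the child, and return the child's exit code to the parent.
 */
function run_in_child(callable $task): int
{
    $pid = pcntl_fork();
    if ($pid === -1) {
        throw new RuntimeException('fork failed');
    }
    if ($pid === 0) {
        // Child branch: pcntl_fork() returned 0. Use the task result as exit code.
        exit($task());
    }
    // Parent branch: $pid holds the process ID of the child.
    pcntl_waitpid($pid, $status);
    return pcntl_wexitstatus($status);
}

echo run_in_child(function (): int { return 7; }), "\n"; // prints 7
```

A daemon does the opposite of this helper (the parent exits and the child keeps running), but the test on the return value is the same.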

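if ($pid > 0) {
    // Parent: report and exit, leaving the child running
    echo "daemon process started\n";
    exit;
}
// Child: become the leader of a new session without a controlling terminal
$sid = posix_setsid();
if ($sid < 0) {
    exit(2);
}
chdir('/');
// Record the PID of the daemon in the pid file
file_put_contents($pidFilename, getmypid());
run_process();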
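
Before writing the pid file, it is often useful to check whether another instance is already running. The sketch below is one possible way to do that, assuming the posix extension; the function names daemon_is_running() and write_pid_file() are hypothetical:

```php
<?php
// Sketch of a pid-file guard. Both function names are hypothetical.

/**
 * Return true if the pid file names a process that we are able to signal.
 */
function daemon_is_running(string $pidFilename): bool
{
    if (!is_file($pidFilename)) {
        return false;
    }
    $pid = (int) trim((string) file_get_contents($pidFilename));
    // Signal 0 delivers nothing; it only checks that the process exists and
    // that we may signal it (a process owned by another user yields false).
    return $pid > 0 && posix_kill($pid, 0);
}

/**
 * Write our own PID, refusing to overwrite the file of a live daemon.
 */
function write_pid_file(string $pidFilename): void
{
    if (daemon_is_running($pidFilename)) {
        throw new RuntimeException("daemon already running, see $pidFilename");
    }
    file_put_contents($pidFilename, (string) getmypid(), LOCK_EX);
}
```

A stale pid file left behind by a crashed daemon is simply overwritten, because the recorded process no longer exists.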

Step 5, "close all file descriptors", can be handled in two ways. First, because closing all file descriptors is difficult to implement in PHP, simply do not open any file descriptors before fork(). Second, you can redirect standard output to an error log file with ini_set(), or capture it with ob_start() into a variable and store that in a log file:

ob_start();
var_dump($some_object);
$content = ob_get_clean();
fwrite($fd_log, $content);

Typically, ob_start() is called at the start of the daemon's life cycle, and ob_get_clean() and fwrite() at the end. However, you can also redirect STDIN, STDOUT, and STDERR directly:

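ini_set('error_log', $logDir.'/error.log');
// Close the standard descriptors ...
fclose(STDIN);
fclose(STDOUT);
fclose(STDERR);
// ... and reopen them, so that the new streams take over descriptors 0, 1, and 2
$STDIN = fopen('/dev/null', 'r');
$STDOUT = fopen($logDir.'/application.log', 'ab');
$STDERR = fopen($logDir.'/application.error.log', 'ab');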

Now, our process is disconnected from the terminal and the standard output is redirected to a log file.

Handling Signals

Signals are processed by handlers, which you can install either through the pcntl extension (dispatching them with pcntl_signal_dispatch()) or through libevent. In the first case, you must define a signal handler:

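function sig_handler($signo)
{
    global $fd_log, $pidFilename;
    switch ($signo) {
        case SIGTERM:
            // Shut down: close the log and remove the pid file
            fclose($fd_log);
            unlink($pidFilename);
            exit;
        case SIGHUP:
            // Reload the configuration
            init_data();
            break;
        default:
            // All other signals are ignored
            break;
    }
}
pcntl_signal(SIGTERM, "sig_handler");
pcntl_signal(SIGHUP, "sig_handler");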

Note that PHP does not run the handler at the moment the signal arrives. Handlers are executed only when pending signals are dispatched: call pcntl_signal_dispatch() regularly in the main loop, enable ticks with declare(ticks=1), or, since PHP 7.1, call pcntl_async_signals(true). A signal received while the process is waiting for input or sleeping is not handled until the next dispatch.

We can ignore a signal with the SIG_IGN flag: pcntl_signal(SIGHUP, SIG_IGN); or, if necessary, restore the default handler with the SIG_DFL flag: pcntl_signal(SIGHUP, SIG_DFL);
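
The effect of explicit dispatching can be seen in a few lines. This is a minimal sketch, assuming the pcntl and posix extensions and that neither ticks nor asynchronous signals are enabled; the script sends SIGHUP to itself for demonstration only:

```php
<?php
// Sketch: queue a signal for this process and dispatch it explicitly.

$received = [];

pcntl_signal(SIGHUP, function (int $signo) use (&$received): void {
    // A real daemon would reload its configuration here.
    $received[] = $signo;
});

// Send SIGHUP to ourselves; PHP only records it as pending.
posix_kill(posix_getpid(), SIGHUP);

// Run the handlers for all pending signals.
pcntl_signal_dispatch();

echo count($received), "\n"; // prints 1
```

In a daemon, the pcntl_signal_dispatch() call would sit inside the main loop, so that signals are handled once per iteration.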

Asynchronous I/O with Libevent

With blocking I/O, signals are not processed while the process is waiting. It is therefore recommended to use the libevent library, which provides non-blocking I/O, signal handling, and timers. Libevent offers a simple mechanism for running callback functions when events occur on a file descriptor: write, read, timeout, and signal.

First, declare one or more events with a handler (callback function) and attach them to the event base:

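$base = event_base_new();
$event = event_new();
$errno = 0;
$errstr = '';
// Listening socket in non-blocking mode
$socket = stream_socket_server("tcp://$IP:$port", $errno, $errstr);
stream_set_blocking($socket, 0);
// Call onAccept whenever the socket becomes readable
event_set($event, $socket, EV_READ | EV_PERSIST, 'onAccept', $base);
// Attach the event to the base and activate it
event_base_set($event, $base);
event_add($event);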

The handler functions 'onRead', 'onWrite', and 'onError' must implement the processing logic. Incoming data is collected in a buffer, from which it is read in non-blocking mode:

function onRead($buffer, $id)
{
    // Drain the buffer in chunks of up to 256 bytes
    while ($read = event_buffer_read($buffer, 256)) {
        var_dump($read);
    }
}

The main event loop is started with event_base_loop($base);. You can leave the loop from inside a handler by calling event_base_loopbreak($base);, or after a specified timeout with event_base_loopexit($base, $timeout);.
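
Where the libevent extension is not available, the idea of a readiness-driven loop can still be illustrated with PHP's built-in stream_select(). The sketch below is a stand-in technique, not the libevent API; it uses a local socket pair instead of a real network connection so that it is self-contained:

```php
<?php
// Sketch: a readiness-based read loop using stream_select() instead of libevent.
// A socket pair stands in for a client connection.

[$client, $server] = stream_socket_pair(STREAM_PF_UNIX, STREAM_SOCK_STREAM, STREAM_IPPROTO_IP);
stream_set_blocking($server, false);

fwrite($client, "ping");
fclose($client); // end of stream lets the loop below terminate

$received = '';
while (true) {
    $read = [$server];
    $write = null;
    $except = null;
    // Wait for at most one second until $server becomes readable.
    $ready = stream_select($read, $write, $except, 1);
    if ($ready === false) {
        break; // select failed
    }
    if ($ready === 0) {
        continue; // timeout: a daemon could do periodic work here
    }
    $chunk = fread($server, 256);
    if ($chunk === '' || $chunk === false) {
        break; // the peer closed the connection
    }
    $received .= $chunk;
}
fclose($server);

echo $received, "\n"; // prints ping
```

The timeout branch plays the role of a timer event, and the readable branch corresponds to a read callback.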

The error handler deals with failed events:

function onError($buffer, $error, $id)
{
    // $id is passed as an argument, so it must not be redeclared as global
    global $buffers, $ctx_connections;
    event_buffer_disable($buffers[$id], EV_READ | EV_WRITE);
    event_buffer_free($buffers[$id]);
    fclose($ctx_connections[$id]);
    unset($buffers[$id], $ctx_connections[$id]);
}

Note the following subtlety: working with timers is only possible through a file descriptor. The example in the official documentation does not work. Here is an example of processing that runs at regular intervals:

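$event2 = event_new();
// A temporary file provides the descriptor the timer is bound to
$tmpfile = tmpfile();
event_set($event2, $tmpfile, 0, 'onTimer', $interval);
$res = event_base_set($event2, $base);
// The timeout is given in microseconds
event_add($event2, 1000000 * $interval);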

This code gives us a working timer that fires only once. If we need a "permanent" timer, the onTimer function has to create a new event each time it runs and schedule it again for the next interval:

function onTimer($tmpfile, $flag, $interval)
{
    // $args: arguments for process_data(), assumed to be set elsewhere
    global $base, $event2, $args;
    if ($event2) {
        event_del($event2);
        event_free($event2);
    }
    call_user_func('process_data', $args);
    // Re-arm the timer for the next interval
    $event2 = event_new();
    event_set($event2, $tmpfile, 0, 'onTimer', $interval);
    $res = event_base_set($event2, $base);
    event_add($event2, 1000000 * $interval);
}

When the daemon shuts down, we must release all previously allocated resources:

event_del($event);
event_free($event);
event_base_free($base);

Also note that a signal handler is registered with the EV_SIGNAL flag: event_set($event, SIGHUP, EV_SIGNAL, 'onSignal', $base);

If the signal must be handled every time it arrives, also set the EV_PERSIST flag. Here is the handler for the onAccept event, which fires when a new connection is accepted on a file descriptor:

function onAccept($socket, $flag, $base) {
    global $id, $buffers, $ctx_connections;
    $id++;
    $connection = stream_socket_accept($socket);
    stream_set_blocking($connection, 0);
    // Buffered event with read and error callbacks; $id identifies the connection
    $buffer = event_buffer_new($connection, 'onRead', NULL, 'onError', $id);
    event_buffer_base_set($buffer, $base);
    event_buffer_timeout_set($buffer, 30, 30);
    event_buffer_watermark_set($buffer, EV_READ, 0, 0xffffff);
    event_buffer_priority_set($buffer, 10);
    event_buffer_enable($buffer, EV_READ | EV_PERSIST);
    // Keep references so that the connection and its buffer stay alive
    $ctx_connections[$id] = $connection;
    $buffers[$id] = $buffer;
}

Monitoring a Daemon

It is good practice to design the application so that the daemon process can be monitored. Key indicators are the number of items or requests processed per time interval, the request processing rate, the average time to process a single request, and the idle time.

These metrics show the workload of the daemon. If it cannot cope with the load, you can run another process in parallel or start several child processes.

To obtain these values, sample the counters at regular intervals, such as once per second. For example, idle time is the difference between the measurement interval and the total time the daemon spent processing.

Idle time is typically expressed as a percentage of the measurement interval. For example, if 10 cycles with a total processing time of 50 ms were executed in one second, the idle time is 950 ms, or 95%.

The throughput is 10 rps (requests per second). The average processing time per request, which is the ratio of the total processing time to the number of requests processed, is 5 ms.
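
The arithmetic above can be written down as a small helper. This is only a sketch; the function name daemon_metrics() and the array keys are hypothetical:

```php
<?php
// Sketch: derive the monitoring figures from raw counters.
// daemon_metrics() and its array keys are hypothetical names.

/**
 * @param float $intervalMs length of the measurement interval in milliseconds
 * @param int   $requests   number of requests processed in the interval
 * @param float $busyMs     total processing time in the interval in milliseconds
 */
function daemon_metrics(float $intervalMs, int $requests, float $busyMs): array
{
    $idleMs = $intervalMs - $busyMs;
    return [
        'idle_ms'      => $idleMs,
        'idle_percent' => 100.0 * $idleMs / $intervalMs,
        'rps'          => $requests / ($intervalMs / 1000.0),
        'avg_ms'       => $requests > 0 ? $busyMs / $requests : 0.0,
    ];
}

// One second, 10 requests, 50 ms of processing:
// idle_ms 950, idle_percent 95, rps 10, avg_ms 5
print_r(daemon_metrics(1000.0, 10, 50.0));
```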

These metrics, along with additional ones such as memory usage, queue size, number of transactions, and average database access time, should be made available for monitoring.

An external monitor can obtain the data through a TCP connection or a UNIX socket, usually in Nagios or Zabbix format, depending on the monitoring system. To do this, the daemon must listen on an additional port.

As mentioned above, if one worker process cannot handle the load, we usually run several processes in parallel. The parallel processes should be started by the parent master process, which uses fork() to launch a series of child processes.

Why not start processes with exec() or system()? Because, as a rule, the master needs direct control over its child processes, which we get by communicating through signals. exec() and system() first launch a shell, and the processes started by that shell are not direct children of the master process.
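
The master and worker relationship can be sketched as follows, assuming the pcntl and posix extensions. The function names spawn_workers() and stop_workers() are hypothetical, and the workers only sleep where a real daemon would process requests:

```php
<?php
// Sketch: a master forks workers and later stops them with SIGTERM.
// spawn_workers() and stop_workers() are hypothetical names.

/**
 * Fork $count workers and return their process IDs to the master.
 */
function spawn_workers(int $count): array
{
    $pids = [];
    for ($i = 0; $i < $count; $i++) {
        $pid = pcntl_fork();
        if ($pid === -1) {
            throw new RuntimeException('fork failed');
        }
        if ($pid === 0) {
            // Worker: use the default SIGTERM action and idle until signalled.
            pcntl_signal(SIGTERM, SIG_DFL);
            while (true) {
                sleep(1); // placeholder for real work
            }
        }
        $pids[] = $pid; // master keeps track of its direct children
    }
    return $pids;
}

/**
 * Send SIGTERM to every worker and wait for each one to exit.
 */
function stop_workers(array $pids): int
{
    foreach ($pids as $pid) {
        posix_kill($pid, SIGTERM);
    }
    $reaped = 0;
    foreach ($pids as $pid) {
        if (pcntl_waitpid($pid, $status) === $pid) {
            $reaped++;
        }
    }
    return $reaped;
}

$pids = spawn_workers(3);
echo stop_workers($pids), "\n"; // prints 3
```

Because the workers are direct children, the master knows their process IDs and can signal and reap each of them, which is not the case for processes started through a shell.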

There is also a misconception that a daemon can be created with the nohup command. Yes, it is possible to issue a command like: nohup php mydaemon.php --master >> /var/log/daemon.log 2>> /var/log/daemon.error.log &

In this case, however, log rotation is difficult, because nohup "captures" the file descriptors for STDOUT and STDERR and releases them only when the command ends, so rotating the logs may require restarting the process or even the entire server. Restarting the daemon process can affect the integrity of data processing and may cause partial loss of data.

Starting a Daemon

The daemon must be started either automatically at boot time or with the help of a startup script.

Startup scripts usually live in the directory /etc/rc.d, and service scripts are placed in /etc/init.d/. Start the daemon with service myapp start or /etc/init.d/myapp start, depending on the type of OS.

Here is a sample script text:

#! /bin/sh

#

$appdir = /usr/share/myapp/app.php

$parms = --master –proc=8 --daemon

export $appdir

export $parms

if [ ! -x appdir ]; then

exit 1

fi

if [ -x /etc/rc.d/init.d/functions ]; then

. /etc/rc.d/init.d/functions

fi

RETVAL=0

start () {

echo "Starting app"

daemon /usr/bin/php $appdir $parms

RETVAL=$?

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/mydaemon

echo

return $RETVAL

}

stop () {

echo -n "Stopping $prog: "

killproc /usr/bin/fetchmail

RETVAL=$?

[ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/mydaemon

echo

return $RETVAL

}

case in

start)

start

;;

stop)

stop

;;

restart)

stop

start

;;

status)

status /usr/bin/mydaemon

;;

*)

echo "Usage:

php

if (is_file('app.phar')) {

unlink('app.phar');

}

$phar = new Phar('app.phar', 0, 'app.phar');

$phar->compressFiles(Phar::GZ);

$phar->setSignatureAlgorithm (Phar::SHA1);

$files = array();

$files['bootstrap.php'] = './bootstrap.php';

$rd = new RecursiveIteratorIterator(new RecursiveDirectoryIterator('.'));

foreach($rd as $file){

if ($file->getFilename() != '..' && $file->getFilename() != '.' && $file->getFilename() != __FILE__) {

if ( $file->getPath() != './log'&& $file->getPath() != './script'&& $file->getPath() != '.')

$files[substr($file->getPath().DIRECTORY_SEPARATOR.$file->getFilename(),2)] =

$file->getPath().DIRECTORY_SEPARATOR.$file->getFilename();

}

}

if (isset($opt['version'])) {

$version = $opt['version'];

$file = "buildFromIterator(new ArrayIterator($files));

$phar->setStub($phar->createDefaultStub('bootstrap.php'));

$phar = null;

}

{start|stop|restart|status}"

;;

RETVAL=$?

exit $RETVAL

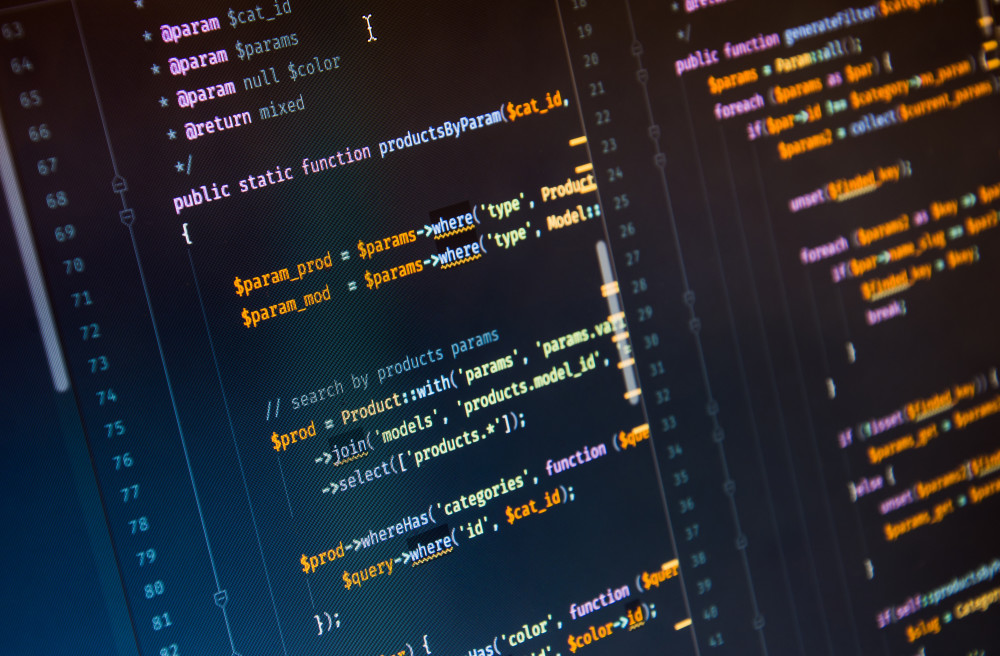

Distributing Your PHP Daemon

To distribute a daemon it is better to pack it in a single phar archive module. The assembled module should include all the necessary PHP and .ini files.

Below is a sample build script:

#php app.phar

myDaemon version 0.1 Debug

usage:

--daemon – run as daemon

--debug – run in debug mode

--settings – print settings

--nofork – not run child processes

--check – check dependency modules

--master – run as master

--proc=[8] – run child processes

Additionally, it may be advisable to make a PEAR package as a standard unix-console utility that when run with no arguments prints its own usage instruction:

[NMD%%CODE%%]

Conclusion

Creating daemons in PHP is not hard, but to make them run correctly it is important to follow the steps described in this article.

Post a comment here if you have questions or comments on how to create daemon services in PHP.
