Learn from your fellow PHP developers with our PHP blogs, or help share the knowledge you've gained by writing your own.

$ composer require monolog/monolog
$ composer require monolog/monolog:1.18.0
$ composer require "monolog/monolog:>1.18.0"
$ composer require monolog/monolog:~1.18.0
$ composer require monolog/monolog:^1.18.0
$ composer global require "phpunit/phpunit:^5.3.*"
$ composer update
$ composer update monolog/monolog

4: Don’t install dev dependencies

In a lot of projects I am working on, I want to make sure that the libraries I download and install work before I start building on them. To this end, many packages include things like unit tests and documentation, so I can run the unit tests myself to validate the package first. This is all fine and good, except when I don’t want them. There are times when I know the package well enough, or have used it enough, not to bother with any of that. In those cases, passing --no-dev to composer install or composer update skips everything listed under require-dev.

5: Optimize your autoload

Regardless of whether you use --prefer-dist or --prefer-source, when a package is incorporated into your project with require, it is simply added to the end of your autoloader. This isn’t always the best solution. Therefore Composer gives us the option to optimize the autoloader with the --optimize switch. Optimizing converts your entire autoloader into classmaps: instead of the autoloader having to call file_exists() to locate a file, Composer creates an array that maps each class to its file location. This can speed up your application by as much as 30%.

$ composer dump-autoload --optimize
$ composer require monolog/monolog:~1.18.0 -o
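To make the classmap idea concrete, here is a minimal sketch of an autoloader that resolves classes through a plain array lookup. It is not Composer's generated code; the class name Demo\Greeter, the temp file path and the $classMap variable are all made up for illustration.

```php
<?php
// Hypothetical class map: fully qualified class name => file path.
// An optimized Composer autoloader relies on a similar array.
$classMap = [
    'Demo\\Greeter' => sys_get_temp_dir() . '/demo_greeter.php',
];

// Write the class file so this sketch runs on its own.
file_put_contents(
    $classMap['Demo\\Greeter'],
    '<?php namespace Demo; class Greeter { public function hello() { return "hello"; } }'
);

// Class-map autoloader: one array lookup, no probing of directories.
spl_autoload_register(function ($class) use ($classMap) {
    if (isset($classMap[$class])) {
        require $classMap[$class];
    }
});

// The class is loaded on first use via the map above.
$greeter = new Demo\Greeter();
echo $greeter->hello(), PHP_EOL;
```

The trade-off is that the map has to be regenerated whenever classes are added or moved, which is why optimization is usually reserved for production builds.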

composer require firebase/php-jwt

require_once('vendor/autoload.php');

use \Firebase\JWT\JWT;

public function generateToken($UiD)
{
    $payload = array(
        'iss' => $_SERVER['SERVER_NAME'], // issuer: the host that created the token
        'exp' => time() + 600,            // expires 10 minutes from now
        'uId' => $UiD                     // id of the logged-in user
    );
    try {
        $jwt = JWT::encode($payload, $this->Secret_Key, 'HS256');
        $res = array("status" => true, "Token" => $jwt);
    } catch (UnexpectedValueException $e) {
        $res = array("status" => false, "Error" => $e->getMessage());
    }
    return $res;
}
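For readers who want to see what an HS256 token is made of, the following sketch builds one by hand with PHP's built-in functions. It is an illustration only, not the firebase/php-jwt implementation; the helper names base64UrlEncode and signHs256, the secret and the claim values are assumptions made for the example.

```php
<?php
// Base64url encoding as used by JWT: URL-safe alphabet, no "=" padding.
function base64UrlEncode(string $data): string
{
    return rtrim(strtr(base64_encode($data), '+/', '-_'), '=');
}

// Build "header.payload.signature" and sign it with HMAC-SHA256.
function signHs256(array $payload, string $secret): string
{
    $header    = base64UrlEncode(json_encode(['typ' => 'JWT', 'alg' => 'HS256']));
    $body      = base64UrlEncode(json_encode($payload));
    $signature = hash_hmac('sha256', "$header.$body", $secret, true);

    return "$header.$body." . base64UrlEncode($signature);
}

// Hypothetical claims mirroring the payload used in generateToken().
$token = signHs256(
    ['iss' => 'example.test', 'exp' => time() + 600, 'uId' => 42],
    'demo-secret'
);

echo $token, PHP_EOL;
```

The payload is only encoded, not encrypted, so anyone holding the token can read the claims; the signature merely proves they were not altered.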

$return['status'] = 1;
$return['_data_'] = $UserData[0];
$return['message'] = 'User Logged in Successfully.';
$jwt = $obj->generateToken($UserData[0]['id']);
if ($jwt['status'] == true) {
    $return['JWT'] = $jwt['Token'];
} else {
    unset($return['_data_']);
    $return['status'] = 0;
    $return['message'] = 'Error:' . $jwt['Error'];
}

public function Authenticate($JWT, $Current_User_id)
{
    try {
        $decoded = JWT::decode($JWT, $this->Secret_Key, array('HS256'));
        // Convert the decoded object into an associative array.
        $payload = json_decode(json_encode($decoded), true);
        if ($payload['uId'] == $Current_User_id) {
            $res = array("status" => true);
        } else {
            $res = array("status" => false, "Error" => "Invalid Token or Token Expired, So Please login Again!");
        }
    } catch (UnexpectedValueException $e) {
        $res = array("status" => false, "Error" => $e->getMessage());
    }
    return $res;
}
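As a rough picture of what happens during decoding, the sketch below verifies an HS256 token by hand: it recomputes the signature, compares it in constant time, and rejects expired tokens. It is a simplified illustration rather than the library's code (for instance, it does not inspect the header's alg field); the function names, secret and timestamps are hypothetical.

```php
<?php
function b64uEncode(string $data): string
{
    return rtrim(strtr(base64_encode($data), '+/', '-_'), '=');
}

function b64uDecode(string $data): string
{
    return base64_decode(strtr($data, '-_', '+/'));
}

// Returns the payload array when the token is valid at time $now, else null.
function verifyHs256(string $jwt, string $secret, int $now): ?array
{
    $parts = explode('.', $jwt);
    if (count($parts) !== 3) {
        return null; // not a three-part token
    }
    [$header, $body, $signature] = $parts;

    // Recompute the signature and compare in constant time.
    $expected = b64uEncode(hash_hmac('sha256', "$header.$body", $secret, true));
    if (!hash_equals($expected, $signature)) {
        return null;
    }

    $payload = json_decode(b64uDecode($body), true);
    if (!is_array($payload) || (isset($payload['exp']) && $now >= $payload['exp'])) {
        return null; // malformed payload or expired token
    }
    return $payload;
}

// Build a demo token that expires at timestamp 1000.
$secret = 'demo-secret';
$header = b64uEncode(json_encode(['typ' => 'JWT', 'alg' => 'HS256']));
$body   = b64uEncode(json_encode(['uId' => 42, 'exp' => 1000]));
$jwt    = "$header.$body." . b64uEncode(hash_hmac('sha256', "$header.$body", $secret, true));

$valid       = verifyHs256($jwt, $secret, 900);        // checked before expiry
$expired     = verifyHs256($jwt, $secret, 1100);       // checked after expiry
$wrongSecret = verifyHs256($jwt, 'other-secret', 900); // signed with a different key

var_dump($valid !== null, $expired !== null, $wrongSecret !== null);
```

Passing the current time as a parameter keeps the expiry check easy to test; a real endpoint would pass time().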

if (isset($_POST['Uid'])) {
    $resp = $obj->Authenticate($_POST['JWT'], $_POST['Uid']);
    if ($resp['status'] == false) {
        $return['status'] = 0;
        $return['message'] = 'Error:' . $resp['Error'];
    } else {
        $blogs = $obj->get_all_blogs($_POST['Uid']);
        if (count($blogs) > 0) {
            $return['status'] = 1;
            $return['_data_'] = $blogs;
            $return['message'] = 'Success.';
        } else {
            $return['status'] = 0;
            $return['message'] = 'Error:Invalid UserId!';
        }
    }
} else {
    $return['status'] = 0;
    $return['message'] = 'Error:User Id not provided!';
}

<?php
header("Content-Type: application/json; charset=UTF-8");
require_once('vendor/autoload.php');

use \Firebase\JWT\JWT;

class DBClass {
    private $host = "localhost";
    private $username = "root";
    private $password = "";
    private $database = "news";
    public $connection;
    private $Secret_Key = "*$%43MVKJTKMN$#";

    // Open the PDO connection; returns null if connecting fails.
    public function connect() {
        $this->connection = null;
        try {
            $this->connection = new PDO("mysql:host=" . $this->host . ";dbname=" . $this->database, $this->username, $this->password);
            $this->connection->exec("set names utf8");
        } catch (PDOException $exception) {
            echo "Error: " . $exception->getMessage();
        }
        return $this->connection;
    }

    // Return the matching user rows (empty array if the credentials are wrong).
    public function login($email, $password) {
        if ($this->connection == null) {
            $this->connect();
        }
        $query = "SELECT id,name,email,createdAt,updatedAt from users where email= ? and password= ?";
        $stmt = $this->connection->prepare($query);
        $stmt->execute(array($email, md5($password)));
        $ret = $stmt->fetchAll(PDO::FETCH_ASSOC);
        return $ret;
    }

    // Return all blogs written by the given user.
    public function get_all_blogs($Uid) {
        if ($this->connection == null) {
            $this->connect();
        }
        $query = "SELECT b.*,u.id as Uid,u.email as Uemail,u.name as Uname from blogs b join users u on u.id=b.user_id where b.user_id= ?";
        $stmt = $this->connection->prepare($query);
        $stmt->execute(array($Uid));
        $ret = $stmt->fetchAll(PDO::FETCH_ASSOC);
        return $ret;
    }

    // Send the JSON response and stop the script.
    public function response($array) {
        echo json_encode($array);
        exit;
    }

    // Create a token that identifies the user and expires after 10 minutes.
    public function generateToken($UiD) {
        $payload = array(
            'iss' => $_SERVER['SERVER_NAME'],
            'exp' => time() + 600,
            'uId' => $UiD
        );
        try {
            $jwt = JWT::encode($payload, $this->Secret_Key, 'HS256');
            $res = array("status" => true, "Token" => $jwt);
        } catch (UnexpectedValueException $e) {
            $res = array("status" => false, "Error" => $e->getMessage());
        }
        return $res;
    }

    // Decode the token and check that it belongs to the given user.
    public function Authenticate($JWT, $Current_User_id) {
        try {
            $decoded = JWT::decode($JWT, $this->Secret_Key, array('HS256'));
            $payload = json_decode(json_encode($decoded), true);
            if ($payload['uId'] == $Current_User_id) {
                $res = array("status" => true);
            } else {
                $res = array("status" => false, "Error" => "Invalid Token or Token Expired, So Please login Again!");
            }
        } catch (UnexpectedValueException $e) {
            $res = array("status" => false, "Error" => $e->getMessage());
        }
        return $res;
    }
}

$return = array();
$obj = new DBClass();

if (isset($_GET['action']) && $_GET['action'] != '') {
    if ($_GET['action'] == "login") {
        if (isset($_POST['email']) && isset($_POST['password'])) {
            $UserData = $obj->login($_POST['email'], $_POST['password']);
            if (count($UserData) > 0) {
                $return['status'] = 1;
                $return['_data_'] = $UserData[0];
                $return['message'] = 'User Logged in Successfully.';
                $jwt = $obj->generateToken($UserData[0]['id']);
                if ($jwt['status'] == true) {
                    $return['JWT'] = $jwt['Token'];
                } else {
                    unset($return['_data_']);
                    $return['status'] = 0;
                    $return['message'] = 'Error:' . $jwt['Error'];
                }
            } else {
                $return['status'] = 0;
                $return['message'] = 'Error:Invalid Email or Password!';
            }
        } else {
            $return['status'] = 0;
            $return['message'] = 'Error:Email or Password not provided!';
        }
    } elseif ($_GET['action'] == "UserBlogs") {
        if (isset($_POST['Uid'])) {
            $resp = $obj->Authenticate($_POST['JWT'], $_POST['Uid']);
            if ($resp['status'] == false) {
                $return['status'] = 0;
                $return['message'] = 'Error:' . $resp['Error'];
            } else {
                $blogs = $obj->get_all_blogs($_POST['Uid']);
                if (count($blogs) > 0) {
                    $return['status'] = 1;
                    $return['_data_'] = $blogs;
                    $return['message'] = 'Success.';
                } else {
                    $return['status'] = 0;
                    $return['message'] = 'Error:Invalid UserId!';
                }
            }
        } else {
            $return['status'] = 0;
            $return['message'] = 'Error:User Id not provided!';
        }
    }
} else {
    $return['status'] = 0;
    $return['message'] = 'Error:Action not provided!';
}

$obj->response($return);
$obj->connection = null;
?>

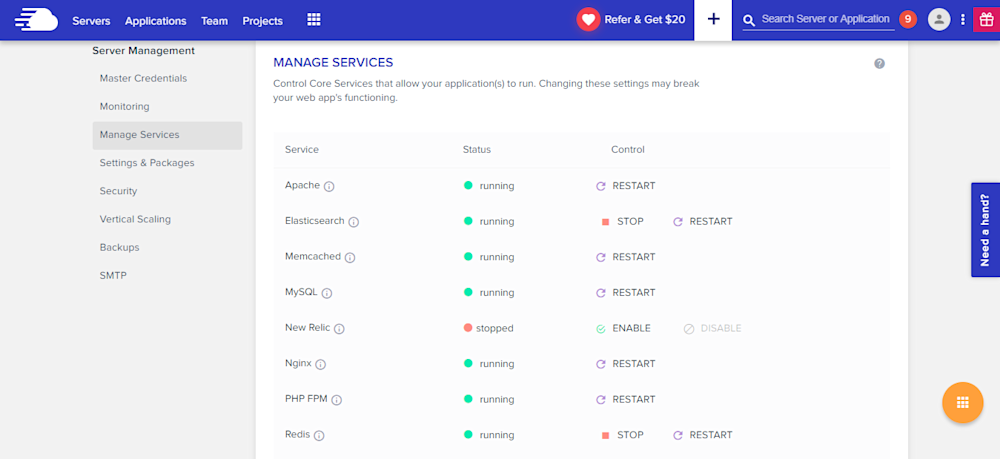

sudo apt-get update
sudo apt-get install unzip
sudo apt-get install libwww-perl libdatetime-perl

curl https://aws-cloudwatch.s3.amazonaws.com/downloads/CloudWatchMonitoringScripts-1.2.2.zip -O

unzip CloudWatchMonitoringScripts-1.2.2.zip && \
rm CloudWatchMonitoringScripts-1.2.2.zip && \
cd aws-scripts-mon

cloudwatch:GetMetricStatistics

cloudwatch:PutMetricData

ec2:DescribeTags

cloudwatch:ListMetrics

cp awscreds.template awscreds.conf

AWSAccessKeyId = my_access_key_id

AWSSecretKey = my_secret_access_key

./mon-put-instance-data.pl --mem-util --verify --verbose

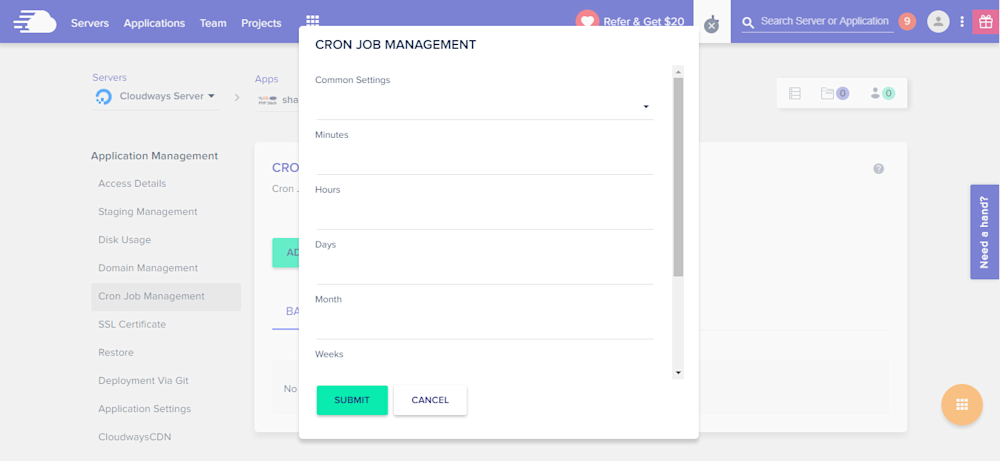

crontab -e

*/5 * * * * ~/STORAGE/cloudwatch/aws-scripts-mon/mon-put-instance-data.pl --mem-util --mem-avail --mem-used --disk-space-util --disk-space-avail --disk-space-used --disk-path=/ --disk-path=/STORAGE --from-cron

--disk-path=/ --disk-path=/home

We will use the dompdf package for generating the PDF file. First we install barryvdh/laravel-dompdf with Composer, then we add a new route URL with a controller method, and then we create a Blade file. After that we just run the project with serve and check that the PDF file is offered for download.

To get started, we need a fresh Laravel 5.7 application, so open a terminal and run the command below:

composer create-project --prefer-dist laravel/laravel blog

composer require barryvdh/laravel-dompdf

'providers' => [
    ....
    Barryvdh\DomPDF\ServiceProvider::class,
],

'aliases' => [
    ....
    'PDF' => Barryvdh\DomPDF\Facade::class,
]

Route::get('demo-generate-pdf','HomeController@demoGeneratePDF');

Add the demoGeneratePDF() method that the route points to:

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use PDF;

class HomeController extends Controller
{
    // Render the myPDF Blade view into a PDF and send it as a download.
    public function demoGeneratePDF()
    {
        $data = ['title' => 'Welcome to My Blog'];
        $pdf = PDF::loadView('myPDF', $data);

        return $pdf->download('demo.pdf');
    }
}

<!DOCTYPE html>
<html>
<head>
    <title>Hi</title>
</head>
<body>
    <h1>Welcome to My BLOG - {{ $title }}</h1>
    <p>Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod
    tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam,
    quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo
    consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse
    cillum dolore eu fugiat nulla pariatur. Excepteur sint occaecat cupidatat non
    proident, sunt in culpa qui officia deserunt mollit anim id est laborum.</p>
</body>
</html>

php artisan serve
